In recognition of disinformation’s prevalence and potential to cause harm, many social media platforms have taken actions to limit, remove, or combat both disinformation and misinformation in various ways. These responses are typically informed by platforms’ policies regarding content and behavior and mostly operationalized by product features and technical or human intervention. This section examines the variety of approaches companies have taken to address digital disinformation on their platforms.

Sometimes the moderation of content on social media comes under the pretense of combating disinformation when it is actually in service of illiberal government objectives. It is important to note that platforms controlled by companies in authoritarian countries often remove disinformation and other harmful content. This can raise important censorship concerns, particularly when the harm is defined as criticism directed toward the government under which the company operates.

(A). Platform Policies on Disinformation and Misinformation

A handful of the world’s largest and most popular social media companies have developed policies and community standards to address disinformation and misinformation. This section examines some of the most significant private sector policy responses to disinformation, including from Facebook, Twitter, and YouTube, as well as growing companies such as TikTok and others.

1. Facebook Policies

At Facebook, user activities are governed by a set of policies known as Community Standards. This set of rules does not presently ban disinformation or misinformation in general terms; however, it does feature several prohibitions that may apply to countering disinformation and misinformation in specific contexts. For example, the Community Standards prohibit content that misrepresents information about voting or elections, incites violence, promotes hate speech, or includes misinformation related to the COVID-19 pandemic. The Community Standards also prohibit “Coordinated Inauthentic Behavior,” which is defined to generally prohibit activities that are characteristic of large-scale information operations on the platform. Once detected, networks participating in coordinated inauthentic behavior are removed. In addition, Facebook has begun developing policies, engaging with experts, and building technology to increase the safety of women across its family of apps. Rules against harassment, unwanted messaging, and non-consensual intimate imagery, all of which disproportionately target women, are part of Facebook’s efforts to make women feel safer. However, more work still needs to be done, as the burden often falls on women to report abuse and manage their safety on the platform.

Outside of these specific contexts, Facebook’s Community Standards include an acknowledgment that while disinformation is not inherently prohibited, the company has a responsibility to reduce the spread of “false news.” In operationalizing this responsibility, Facebook commits to algorithmically reduce (or down-rank) the distribution of such content, in addition to other steps to mitigate its impact and disincentivize its spread. The company has also developed a policy of removing particular categories of manipulated media that may mislead users; however, the policy is limited in scope. It extends only to media that is the product of artificial intelligence or machine learning and includes an allowance for any media deemed to be satire or content that edits, omits, or changes the order of words that were actually said.

It is worth recognizing that while Facebook’s policies generally apply to all users, the company notes that “[i]n some cases, we allow content which would otherwise go against our Community Standards – if it is newsworthy and in the public interest.” The company has further indicated that speech by politicians will generally be treated as within the scope of this newsworthiness exception, and therefore not subject to removal. Such posts are, however, subject to labeling that indicates that the posts violate the Community Standards. In recent years, Facebook has taken steps to remove political speech and deplatform politicians, including former President Donald Trump. Following the January 6th attack on the U.S. Capitol, Facebook removed Trump’s account from the platform indefinitely. The Oversight Board upheld the decision but criticized the open-ended nature of the suspension, so Facebook limited the suspension to two years. In 2018, Facebook also deplatformed Min Aung Hlaing and other senior Myanmar military leaders for conducting disinformation campaigns and inciting ethnic violence.

2. Twitter Policies

The Twitter Rules govern permissible content on Twitter, and while there is no general policy on misinformation, the Rules do include several provisions to address false or misleading content and behavior in specific contexts. Twitter’s policies prohibit disinformation and other content that may suppress participation or mislead people about when, where, or how to participate in a civic process; content that includes hate speech or incites violence or harassment; and content that goes directly against guidance from authoritative sources of global and local public health information. Twitter also prohibits inauthentic behavior and spam, which are elements of information operations that make use of disinformation and other forms of manipulative content. In a related measure, Twitter has updated its hateful conduct policy to prohibit language that dehumanizes people on the basis of race, ethnicity, and national origin.

Following public consultation, Twitter has also adopted a policy regarding sharing synthetic or manipulated media that may mislead users. The policy requires an evaluation of three elements: whether (1) the media itself is manipulated (or synthetic); (2) the media is being shared in a deceptive or misleading manner; and (3) the content risks causing serious harm (including threats to users’ physical safety, the risk of mass violence or civil unrest, and any threats to the privacy or ability of a person or group to freely express themselves or participate in civic events). If all three elements of the policy are met, including a determination that the content is likely to cause serious harm, Twitter will remove the content. If only some of the elements are met, Twitter may label the manipulated content, warn users who try to share it, or attach links to trusted fact-checking content to provide additional context.
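The three-element evaluation described above can be read as a simple decision rule. The following is a minimal sketch of that rule, not Twitter's actual implementation; the class, field, and function names are hypothetical and were chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaAssessment:
    """Hypothetical record of a reviewer's answers to the three policy questions."""
    is_manipulated: bool        # (1) the media is manipulated or synthetic
    shared_deceptively: bool    # (2) it is shared in a deceptive or misleading manner
    risks_serious_harm: bool    # (3) it risks causing serious harm


def decide_action(assessment: MediaAssessment) -> str:
    """Map the three elements to an outcome, following the policy as summarized above."""
    elements_met = sum([
        assessment.is_manipulated,
        assessment.shared_deceptively,
        assessment.risks_serious_harm,
    ])
    if elements_met == 3:
        # All three elements met: the content is removed.
        return "remove"
    if elements_met >= 1:
        # Only some elements met: the platform may label, warn, or add context.
        return "label_or_warn"
    return "no_action"
```

In this reading, a doctored video shared deceptively but judged unlikely to cause serious harm would fall into the "label_or_warn" branch rather than being removed.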

In the context of electoral and political disinformation, Twitter’s policies on elections explicitly prohibit misleading information about the voting process. Its rules note: “You may not use Twitter’s services for the purpose of manipulating or interfering in elections or other civic processes. This includes posting or sharing content that may suppress participation or mislead people about when, where, or how to participate in a civic process.” However, inaccurate statements about an elected or appointed official, candidate, or political party are excluded from this policy. Under these rules, Twitter has removed postings that feature disinformation about election processes, such as promoting the wrong voting day or false information about polling places, thereby addressing content that EMBs, election observers, and others are increasingly working to monitor and report. It is notable that tweets from elected officials and politicians may be subject to Twitter’s public interest exception.

Under its public interest exception, Twitter notes that it “may choose to leave up a Tweet from an elected or government official that would otherwise be taken down” and cites the public’s interest in knowing about officials’ actions and statements. When this exception applies, rather than remove the offending content, Twitter will “place it behind a notice providing context about the rule violation that allows people to click through to see the Tweet.” The company has identified criteria for determining when a Tweet that violates its policies may be subject to this public interest exception, which include:

- The Tweet violates one or more of the platform’s rules

- The Tweet was posted by a verified account

- The account has more than 100,000 followers

- The account represents a current or potential member of a governmental or legislative body:

  - Current holders of an elected or appointed leadership position in a governmental or legislative body, OR

  - Candidates or nominees for political office, OR

  - Registered political parties
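The criteria listed above can be expressed as a single eligibility check. The sketch below assumes that all four top-level criteria must hold together and that any one of the three account categories satisfies the last criterion; the role labels, class, and function names are hypothetical, and this is an illustration rather than Twitter's own logic.

```python
from dataclasses import dataclass
from typing import Optional

FOLLOWER_THRESHOLD = 100_000  # "more than 100,000 followers" per the list above

# Hypothetical labels for the three qualifying account categories.
QUALIFYING_ROLES = {"office_holder", "candidate_or_nominee", "registered_party"}


@dataclass(frozen=True)
class TweetContext:
    violates_rules: bool
    author_verified: bool
    author_followers: int
    author_role: Optional[str] = None  # None means no governmental or political role


def meets_exception_criteria(ctx: TweetContext) -> bool:
    """Return True only if every listed criterion is satisfied (assumed conjunctive)."""
    return (
        ctx.violates_rules
        and ctx.author_verified
        and ctx.author_followers > FOLLOWER_THRESHOLD
        and ctx.author_role in QUALIFYING_ROLES
    )
```

Meeting these criteria only makes a Tweet eligible for consideration; whether the exception is actually applied still depends on a separate weighing of potential harm against public-interest value.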

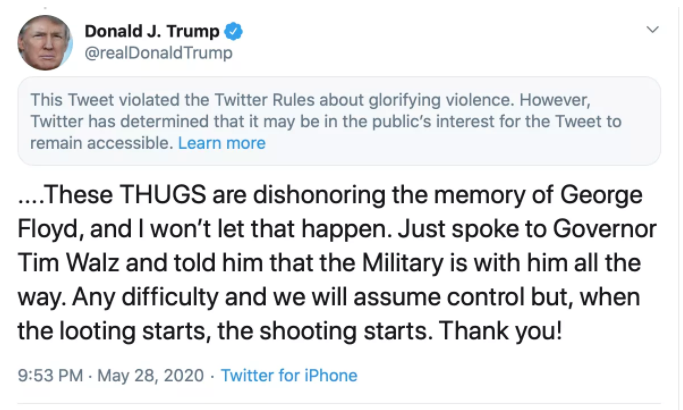

In considering how to apply this exception, the company has also developed and published a set of protocols for weighing the potential risk and severity of harm against the public-interest value of the Tweet. During the 2020 U.S. Presidential Election cycle, Twitter applied the public interest notice to many of former President Donald Trump’s Tweets. Tweets that fall under this label display a warning, as shown in the accompanying image.

Following the January 6th attack on the U.S. Capitol and Tweets that were found to incite violence, Twitter deplatformed former President Trump. The company published a blog post explaining its rationale.

Finally, it is also noteworthy that Twitter has demonstrated a willingness to develop policies in response to specific topics that present a risk of polluting the platform’s information environment. For example, the company has implemented a special set of policies for removing content related to the QAnon conspiracy theory and accounts that promulgate it. Since the start of the COVID-19 pandemic, Twitter has also developed policies to counter COVID-related disinformation and misinformation, including a COVID-19 misleading information policy covering content that affects health and public safety.

3. YouTube Policies

YouTube follows a three-strike policy under which accounts that repeatedly violate its rules, including those related to disinformation, are suspended or terminated. YouTube policies include several provisions relevant to disinformation in particular contexts, including content that aims to mislead voters about the time, place, means, or eligibility requirements for voting or participating in a census; that advances false claims related to the eligibility requirements for political candidates to run for office and elected government officials to serve in office; or that promotes violence or hatred against, or harasses, individuals or groups based on intrinsic attributes. In addition, YouTube has expanded its anti-harassment policy to prohibit video creators from using hate speech and insults on the basis of gender, sexual orientation, or race.

Like other platforms, the rules also include a specific policy against disinformation regarding public health or medical information in the context of the COVID-19 pandemic. As misleading YouTube videos about the coronavirus gained 62 million views in just the first few months of the pandemic, YouTube indicated it removed “thousands and thousands” of videos spreading misinformation in violation of the platform’s policies. The platform reiterated its commitment to stopping the spread of such harmful content.

YouTube has also developed a policy regarding manipulated media, which prohibits content that has been technically manipulated or doctored in a way that misleads users (beyond clips taken out of context) and may pose a serious risk of egregious harm. To further mitigate risks of manipulation or disinformation campaigns, YouTube also has policies that prohibit account impersonation, misrepresenting one’s country of origin, or concealing association with a government actor. These policies also prohibit artificially increasing engagement metrics, either through the use of automatic systems or by serving up videos to unsuspecting viewers.

4. TikTok Policies

In January 2020, TikTok implemented the ability for users to flag content as misinformation by selecting its new “misleading information” category. Owned by the Chinese company ByteDance, TikTok has been overshadowed by privacy concerns, as Chinese regulation requires companies to comply with government requests to hand over data. In April 2020, TikTok released a statement regarding the company’s handling of personal information, noting its “adherence to globally recognized security control standards like NIST CSF, ISO 27001 and SOC2,” goals towards more transparency, and limitations on the “number of employees who have access to user data.”

While such privacy concerns have loomed large in public debates involving the platform, disinformation is also a challenge that the company has been navigating. In response to these issues, TikTok has implemented policies to prohibit misinformation that could cause harm to users, “including content that misleads people about elections or other civic processes, content distributed by disinformation campaigns, and health misinformation.” These policies apply to all TikTok users (irrespective of whether they are public figures), and they are enforced through a combination of content removals, account bans, and making it more difficult to find harmful content, like misinformation and conspiracy theories, in the platform’s recommendations or search features. TikTok established a moderation policy “which prohibits synthetic or manipulated content that misleads users by distorting the truth of events in a way that could cause harm.” This includes banning deepfakes in order to prevent the spread of disinformation.

5. Snapchat Policies

In January 2017, Snapchat created policies to combat the spread of disinformation for the first time. Snapchat implemented policies for its news providers on the platform’s Discover page in order to combat disinformation, as well as to regulate information that is considered inappropriate for minors. These new guidelines require news outlets to fact-check their articles before they can be displayed on the platform’s Discover page to prevent the spread of misleading information.

In an op-ed, Snapchat CEO Evan Spiegel described the platform as different from other types of social media, saying “content designed to be shared by friends is not necessarily content designed to deliver accurate information.” This inherent difference between Snapchat and other platforms allows it to combat misinformation in a unique way. Unlike many other social media platforms, Snapchat has no feed of information from users, a distinction that makes Snapchat more comparable to a messaging app. With Snapchat’s updates, the platform makes use of human editors who monitor and regulate what is promoted on the Discover page, preventing the spread of false information.

In June 2020, Snapchat released a statement expressing solidarity with the Black community amid Black Lives Matter protests following the death of George Floyd. The platform said that it “may continue to allow divisive people to maintain an account on Snapchat, as long as the content that is published on Snapchat is consistent with our [Snapchat's] community guidelines, but we [Snapchat] will not promote that account or content in any way.” Snapchat also announced that it would no longer promote President Trump’s content on its Discover home page, citing “that his public comments off the site could incite violence.”

6. VKontakte

While many of the largest platforms have adopted policies aimed at addressing disinformation, there are notable exceptions to this trend. For example, VKontakte is one of the most popular social media platforms in Russia and has been cited for its use to spread disinformation, particularly in Russian elections. The platform has also been cited for its use by Kremlin-backed groups to spread disinformation beyond Russia’s borders, impacting other countries’ elections, as in Ukraine. While the platform is frequently used as a means to spread disinformation, it does not appear that VKontakte is enforcing any policies to stop the spread of fake news.

7. Parler

Parler was created in 2018 and has often been dubbed the “alternative” to Twitter for conservative voices, largely due to its focus on freedom of speech with only de minimis content moderation policies. This unrestricted, unmoderated speech has led to a rise in anti-Semitism, hate, disinformation, propaganda, and extremism. Parler has been linked to the coordinated planning of the January 6th insurrection at the U.S. Capitol. In the aftermath of this event, multiple services, including Amazon, Apple, and Google, removed Parler from their platforms due to its lack of content moderation and a serious risk to public safety. This move demonstrates the ways in which the wider marketplace can apply pressure on specific platforms to implement policies to combat disinformation and other harmful content. After discussions with Amazon and Apple, Parler made changes to the app to better detect and monitor hate speech. Google announced it would allow Parler to return if it made changes to the app consistent with Google’s policies, but as of September 2021, Parler had still not been added back to the Google Play store.

(B). Technical and Product Interventions to Curtail Disinformation

Private sector platforms have developed a number of product features and technical interventions intended to help limit the spread of disinformation, while balancing the interests of free expression. The design and implementation of these mechanisms are highly dependent on the nature and functionality of specific platforms. This section examines responses across these platforms, including traditional social media services, image and video sharing platforms, and messaging applications. Of note, one of the biggest issues these platforms have tried, and continue to try, to address across the board is virality: the speed at which information travels on these platforms. When virality is combined with algorithmic bias, it can lead to coordinated disinformation campaigns, civil unrest, and violent harm.

1. Traditional Social Media Services

Two of the world’s largest social media companies, Facebook and Twitter, have implemented interventions and features that work either to suppress the virality of disinformation and alert users to its presence or create friction that impacts user behavior to slow the spread of false information within and across networks.

At Twitter, product teams in 2020 began rolling out automated prompts that caution users against sharing links they have not themselves opened; this measure is intended to “promote informed discussion” and encourage users to evaluate information before sharing it. This follows the introduction of content labels and warnings, which the platform has affixed to Tweets that are not subject to removal under the platform’s policies (or under the company’s “public interest” exception, as described above) but which nonetheless may include misinformation or manipulated media. While these labels provide users with additional context (and are examined more thoroughly in the section of this chapter dedicated to such features), the labels themselves introduce a signal and potential friction that may impact a user’s decision to share or distribute misinformation.

Facebook’s technical efforts to curtail disinformation include using algorithmic strategies to “down-rank” false or disputed information, decreasing the content’s visibility in the News Feed and reducing the extent to which the post may be encountered organically. The company also applies these distribution limits against entire pages and websites that repeatedly share false news. The company has also begun to employ notifications to users who have engaged with certain misinformation and disinformation in limited contexts, such as in connection with a particular election or regarding health misinformation related to the COVID-19 pandemic. Although this intervention is in limited use, the company says these notifications are part of an effort to “help friends and family avoid false information.”
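The down-ranking approach described above can be illustrated with a toy feed ranker. This is a sketch under stated assumptions only: the demotion factors, field names, and scoring scheme are hypothetical and do not reflect Facebook's actual ranking system, whose details are not described in this section.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical multipliers; real values are not public in this section.
FALSE_RATING_DEMOTION = 0.2      # applied to posts rated false or disputed
REPEAT_OFFENDER_DEMOTION = 0.5   # applied to posts from pages that repeatedly share false news


@dataclass(frozen=True)
class Post:
    post_id: str
    base_score: float                  # relevance score before any demotion
    rated_false: bool = False
    from_repeat_offender: bool = False


def ranking_score(post: Post) -> float:
    """Reduce a post's score when it, or its source page, has been flagged."""
    score = post.base_score
    if post.rated_false:
        score *= FALSE_RATING_DEMOTION
    if post.from_repeat_offender:
        score *= REPEAT_OFFENDER_DEMOTION
    return score


def rank_feed(posts: List[Post]) -> List[Post]:
    """Order posts from highest to lowest adjusted score."""
    return sorted(posts, key=ranking_score, reverse=True)
```

The point of the sketch is that demoted content is not removed: it remains available but sinks in the ordering, so users are less likely to encounter it organically.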

Both Twitter and Facebook utilize automation for detecting certain types of misinformation and disinformation and enforcing content policies. These systems have played a more prevalent role during the pandemic as public health concerns have required human content moderators to disperse from offices. The companies similarly employ technical tools to assist in the detection of inauthentic activity on their platforms. While these efforts are not visible to users, the companies publicly disclose the fruits of these labors in periodic transparency reports which include data on account removals. These product features have been deployed globally, including in Sri Lanka, Myanmar, Nigeria, and other countries.

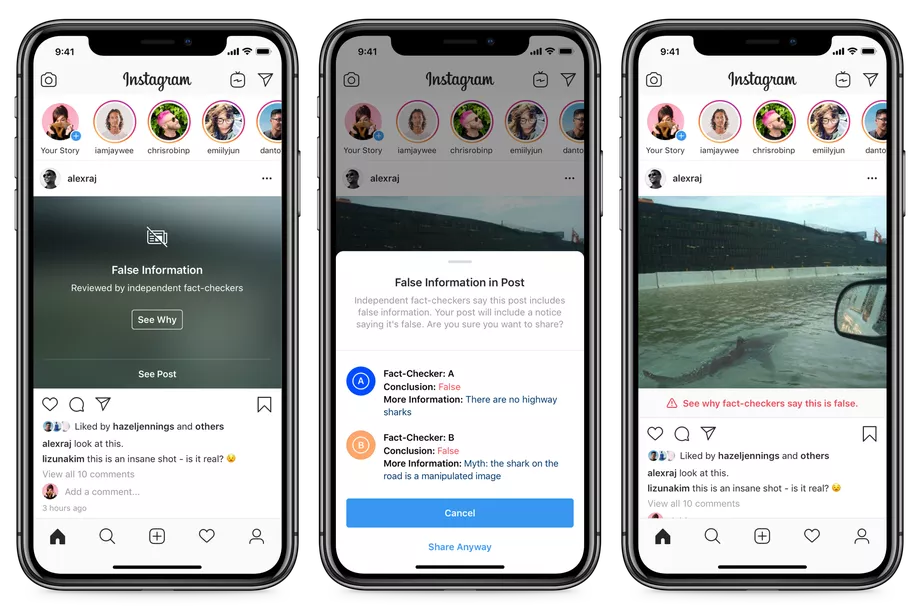

Platforms that share videos and images have also integrated features into their products to limit the spread of false information. On Instagram, a Facebook company, the platform removes content identified as misinformation from its Explore page and from hashtags; the platform also makes accounts that repeatedly post misinformation harder to find by filtering content from those accounts from searchable pages. Examples of the ways Instagram has integrated efforts to curb dis- and misinformation into its product development are shown below:

TikTok uses technology to augment its content moderation practices, particularly to assist in identifying inauthentic behavior, patterns, and accounts dedicated to spreading misleading or spam content. The company notes that its tools enforce its rules and make it more difficult to find harmful content, like misinformation and conspiracy theories, in the platform’s recommendations or search features.

To support enforcement of its policies, YouTube similarly employs technology, particularly machine learning, to augment its efforts. As the company notes among its policies, “machine learning is well-suited to detect patterns, which helps us to find content similar to other content we’ve already removed, even before it’s viewed.”

2. Messaging Applications

Messaging platforms have proven to be significant vectors for the proliferation of disinformation. The risks are particularly pronounced among closed, encrypted platforms, where companies are unable to monitor or review content.

Despite the complexity of the disinformation challenge on closed platforms, WhatsApp in particular has been developing technical approaches to mitigate the risks. Following a violent episode in India linked to viral messages on the platform being forwarded to large groups of up to 256 users at a time, WhatsApp introduced limits on message forwarding in 2018, which prevent users from forwarding a message to more than five people, as well as visual indicators to ensure that users can distinguish between forwarded messages and original content. More recently, in the context of the COVID-19 pandemic, WhatsApp further limited forwarding by announcing that messages that have been forwarded more than five times can subsequently only be shared with one chat at a time. While the encrypted nature of the platform makes it difficult to assess the impact of these restrictions on disinformation specifically, the company reports that the limitations have reduced the spread of forwarded messages by 70%.
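The forwarding limits described above amount to a small rule that depends only on how many times a message has already been forwarded, not on its content, which is why they can operate on an encrypted platform. The sketch below encodes the limits as stated in this section; the constant and function names are hypothetical, and it is not WhatsApp's implementation.

```python
STANDARD_FORWARD_LIMIT = 5       # recipients per forward action under the 2018 limit
HIGHLY_FORWARDED_THRESHOLD = 5   # forwarded more than this many times counts as highly forwarded
HIGHLY_FORWARDED_LIMIT = 1       # highly forwarded messages go to one chat at a time


def max_forward_targets(times_forwarded: int) -> int:
    """Return how many chats a message may be forwarded to in one action."""
    if times_forwarded > HIGHLY_FORWARDED_THRESHOLD:
        return HIGHLY_FORWARDED_LIMIT
    return STANDARD_FORWARD_LIMIT


def can_forward(times_forwarded: int, target_count: int) -> bool:
    """Check a requested forward against the applicable limit."""
    return 1 <= target_count <= max_forward_targets(times_forwarded)
```

For example, a message that has been forwarded twice could still be sent to five chats at once, while one forwarded six times could only be sent to a single chat per action.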

In addition to restricting forwarding behavior on the platform, WhatsApp has also developed systems for identifying and taking down automated accounts that send high volumes of messages. The platform is experimenting with methods to detect patterns in messages through homomorphic encryption evaluation practices. These strategies may help to inform analysis and technical interventions related to disinformation campaigns in the future.

WhatsApp, owned by Facebook, has made a particular effort to combat misinformation about COVID-19 as such content continues to go viral, and the company’s efforts have helped limit the spread of COVID-related dis- and misinformation. WhatsApp has created a WHO Health Alert chatbot to provide accurate information about COVID-19. Users can text a phone number to access the chatbot, which initially provides basic information and then allows users to ask questions on topics including the latest numbers, protection, mythbusters, travel advice, and current news. This allows users to obtain accurate information and get direct answers to their questions. WhatsApp has provided information through this service to over one million users.

3. Search Engines

Google has implemented technical efforts to promote information integrity in search. Google changed its search algorithm to combat the dissemination of fake news and conspiracy theories. In a blog post, Google Vice President of Engineering Ben Gomes wrote that the company would “help surface more authoritative pages and demote low-quality content” in searches. In an effort to provide improved search guidelines, Google uses real people as evaluators who “assess the quality of Google’s search results” and give the company feedback on its experiments. Google also provides “direct feedback tools” to allow users to flag unhelpful, sensitive, or inappropriate content that appears in their searches.