The online world plays an increasingly dominant role in shaping public conversation and driving political events. At the same time, disinformation, hate speech, and online extremism appear to have saturated social media platforms, their harms compounded by ever more powerful network effects and computational systems. The negative consequences for society pose a global challenge that affects every country and nearly every area of public discourse. Disinformation bolsters authoritarians, weakens democratic voices and participation, targets women and marginalized groups, exploits and exacerbates existing social cleavages, and silences opposition. Across the networked public sphere,¹ civil society, governments, and the private sector are grappling with these new online threats, working through their own networks and with one another as part of a whole-of-society effort to improve the integrity of our information environment.

While disinformation has long been a challenge to democracy, the digital age necessitates a renewed commitment and fresh urgency to match the scale, speed, and pervasiveness of online information threats. Meaningful access to a healthy information environment is integral to the functioning of free, rights-respecting societies; as such, countering disinformation and promoting information integrity are necessary priorities for ensuring democracy can thrive globally in the next century and beyond.

HOW THE WORK WAS CONDUCTED

This guide is an ambitious effort to take a global look at measures to combat disinformation and promote information integrity: a collaborative examination of what is being done, what is working, and who is doing it. It was developed by the International Foundation for Electoral Systems, the International Republican Institute, and the National Democratic Institute, with support from USAID to the Consortium for Elections and Political Process Strengthening (CEPPS), and is intended as a guide for practitioners, civil society, and government stakeholders working to advance information integrity and strengthen societal resilience. The research was conducted over two years and led by experts from all three organizations. The team conducted in-country research in three countries, and the database includes more than 275 entries from over 80 countries on every continent except Antarctica; it will be updated and expanded over time. More than a dozen external experts served as peer reviewers and editors. Some research efforts were curtailed because of COVID-19. Given the scope and scale of the challenge, the research is thorough but not exhaustive, and the actions of social media platforms, governments, civil society actors, and activists continued to evolve while the guide was being drafted. For this reason, we intend the guide to be a living platform, with substantive chapters updated annually and the Global Database updated more frequently.

WHAT’S IN THE GUIDE

Highlight

Over two years, CEPPS conducted in-country research in Colombia, Indonesia, and Ukraine. Interviewees were selected for their experience developing interventions, their role in the political system, and their perspective on the information landscape and related issues. The three countries were chosen for the relevant interventions and programs they have developed, their demographic and geographic diversity, their risk of foreign interference, and their critical recent elections and other political events. Ukraine is on the front lines of information space issues: the conflict that followed Russia's 2014 invasion and annexation of Crimea has created a contested information space, often influenced by the Kremlin. Indonesia, one of the world's largest democracies, has held recent elections in which social media played a critical role, spurring innovative responses from election management bodies and civil society to mitigate the impacts of disinformation and promote a healthy information environment. Colombia is the final example: a country with both recent elections and a major peace agreement between the government and rebels that ended a decades-long war. The pact's negotiation, its rejection in a referendum, and its eventual ratification by the legislature unfolded over successive years, providing an important case study of how a peace process and reconciliation are reflected and negotiated online alongside elections and other political events.

Examples and quotes from all three cases are integrated throughout the guide to illustrate lessons learned and the evolution of counter-disinformation programming and other interventions. Where possible, links are provided to entries in the Global Database of Informational Interventions, the most robust effort in the democracy community to catalogue the funders, program types, implementing organizations, and descriptions of counter-disinformation projects. The topics also draw on quotes from stakeholder interviews, reviews and analyses of programs, and monitoring and evaluation reports, as well as media reports and academic literature focused on impact and effectiveness. Finally, because this guide is intended to be a living document, the database and the topics will be revised and improved periodically to reflect the ongoing evolution of the online and offline environment.

The topics are divided into three broad categories: the roles of specific stakeholder groups; legal, normative, and research responses; and crosscutting issues, such as elections and disinformation targeting women and marginalized groups. The topics include:

ROLES

- Building Civil Society Capacity to Mitigate and Counter Disinformation looks at efforts by civil society organizations to combat disinformation and promote information integrity through fact-checking, media literacy, online research, and a host of other programs and initiatives.

- Helping Political Parties Protect the Integrity of Political Information explores the impact of disinformation and hate speech campaigns on political parties in developing countries and provides policy recommendations to help parties counter harmful content and promote positive content.

- Platform-Specific Engagement for Information Integrity explores the policy, enforcement, and partnership responses that social media platforms, large and small, have adopted to address disinformation.

- Election Management Body (EMB) Approaches to Countering Disinformation explores the varying roles that EMBs play in countering disinformation and offers proactive, reactive and collaborative strategies for election authorities to consider.

- Exposing Disinformation through Election Monitoring examines the work and methods of international and domestic monitoring of the information space as a component of election observation.

RESPONSES

- Developing Norms and Standards on Disinformation and Information Integrity Issues provides an overview of the global norms and standards, consistent with human rights, that have been developed to counter disinformation.

- Laws, Regulations, and Enforcement Mechanisms explores how national legal frameworks governing elections address social media, and serves as a resource for lawmakers and international donors considering changes to electoral frameworks.

- Research and Evaluation Tools for Countering Disinformation explores a variety of research tools that practitioners use to understand threat actors, targets, the information ecosystem, and program impact.

CROSSCUTTING DIMENSIONS

- Understanding the Gender Dimensions of Disinformation explores how disinformation campaigns, viral misinformation, and hate speech target and particularly affect women and people with diverse sexual orientations and gender identities by exploiting and manipulating their identities. Accordingly, this topic and every other topic include considerations for gender and marginalized groups in programming and other interventions.

The Global Database of Informational Interventions catalogues interventions that practitioners, donors, and analysts around the world can draw on to understand and counter disinformation.

9 Big Takeaways

In conducting this analysis and examining these critical aspects of the problem, the research team identified key takeaways that should guide counter-disinformation efforts going forward.

UNDERSTANDING DISINFORMATION

Significant work has been done in recent years to understand and diagnose information disorder conceptually. To ground our analysis, this guide builds its definitions and its understanding of both the problems and the solutions primarily on the work of Data & Society, First Draft, and the Oxford Internet Institute's Computational Propaganda Project. These three foundational resources are well regarded in the broader community analyzing disinformation, and their conceptual frames lend themselves to adaptation for practical application.

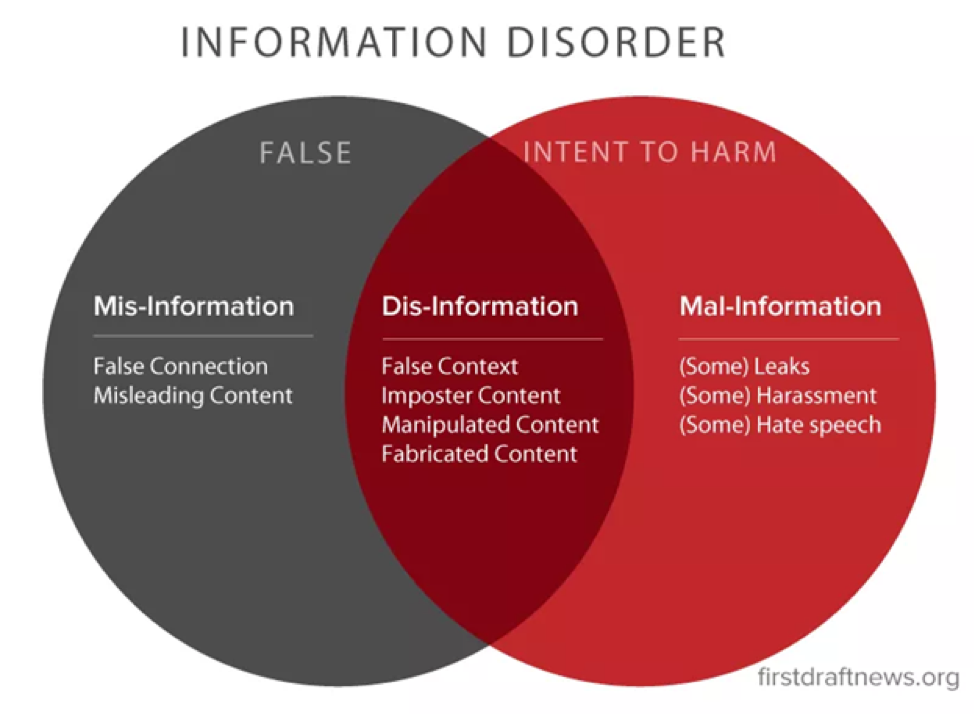

First Draft’s Information Disorder framework provides clear definitions of information disorder and examines its implications for democracy and for local media, the role of television, microtargeting, computational amplification, filter bubbles and echo chambers, and declining trust in the media and public institutions. The framework also describes how misinformation (false information shared without intent to deceive), disinformation (false information shared deliberately to deceive or cause harm), and malinformation (genuine information made public with intent to harm) all contribute to the disorder, which can also be understood as the corruption of information integrity in political systems and discourse.

From Wardle, Claire, and Hossein Derakhshan. “Information Disorder: Toward an Interdisciplinary Framework for Research and Policymaking.” Council of Europe, October 31, 2017. https://shorensteincenter.org/information-disorder-framework-for-research-and-policymaking/.

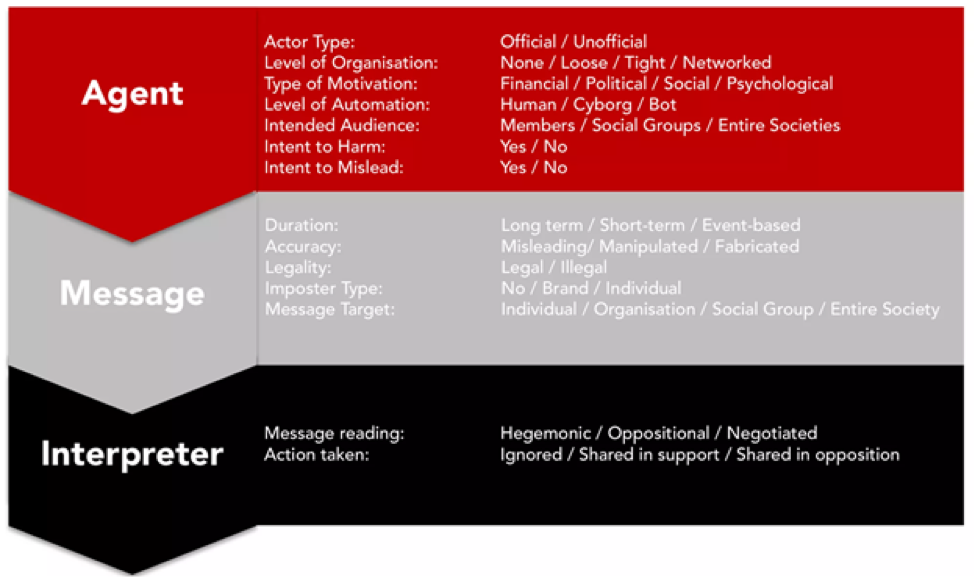

The Information Disorder framework also focuses on three elements of the information ecosystem: the agent (or producer), the message, and the interpreter. Messages pass through several phases, namely creation, production, and distribution. These elements allow us to distinguish among different kinds of interventions according to whether they focus on one, some, or all of the three components. Legal and regulatory frameworks, as well as norms and standards, can target all of these elements, and different actors, such as platforms, civil society organizations, and governments, can design responses that address them in different ways. For instance, media literacy efforts target interpreters, while content moderation focuses on messages and agents.

From Wardle, Claire, and Hossein Derakhshan. “Information Disorder: Toward an Interdisciplinary Framework for Research and Policymaking.” Council of Europe, October 31, 2017. https://shorensteincenter.org/information-disorder-framework-for-research-and-policymaking/.

The Oxford Internet Institute (OII) developed the term “computational propaganda” and defines the practice as “the assemblage of social media platforms, autonomous agents, and big data tasked with the manipulation of public opinion.”² This framework expands our understanding of online threats beyond disinformation to other forms of online manipulation, whether automated or human. It also frames the problem as one requiring technical, sociological, and political responses. In explaining the virality of disinformation, OII’s work shows how communications, behavioral, and psychological studies, as well as computer, data, and information science, all play a role.

Data & Society's Oxygen of Amplification demonstrates how traditional media can amplify false narratives and how they can be manipulated to spread disinformation and misinformation in different ways. As a research group that brings together media and data analysis with social science research, Data & Society also helps define terms and standards. Our glossary relies on Data & Society's report Lexicon of Lies,³ First Draft’s Information Disorder: The Essential Glossary, and other sources drawn from our global database of approaches and the wider literature, including guidance from USAID⁴ and other organizations, including CEPPS. Technical, media, and communications concepts are introduced within the individual topics, and these key terms help describe the problem in a shared way.

Footnotes

1 Benkler, Yochai. The Wealth of Networks: How Social Production Transforms Markets and Freedom. Yale University Press, 2006.

2 Howard, Philip N., and Samuel Woolley. “Political Communication, Computational Propaganda, and Autonomous Agents.” Edited by Samuel Woolley and Philip N. Howard. International Journal of Communication 10, Special Issue (2016): 20.

3 Jack, Caroline. “Lexicon of Lies: Terms for Problematic Information.” Data & Society, August 9, 2017. https://datasociety.net/output/lexicon-of-lies/.

4 USAID Center of Excellence on Democracy, Human Rights and Governance. Disinformation Primer. February 2021.